Android Cross-Process Rendering with HardwareBuffer

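Exchanging image streams between components is a very common requirement. To keep an application robust, the processing steps can be split across separate processes, so that a failure in an intermediate step does not crash the main process and hurt the user experience. In scenarios such as face recognition, scene recognition, and live streaming, image data may pass through many stages that perform format conversion, layer compositing, image warping, color correction, and so on. A typical example is overlaying effects on camera frames in a live stream.

While researching this topic I found little material online. The most helpful source was the article 《基于 HardwareBuffer 实现 Android 多进程渲染》 (multi-process rendering on Android based on HardwareBuffer) by a blogger named Robot 9. However, I did not want to use AIDL for the cross-process part, so after some study I decided to implement cross-process rendering with Socket + HardwareBuffer.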

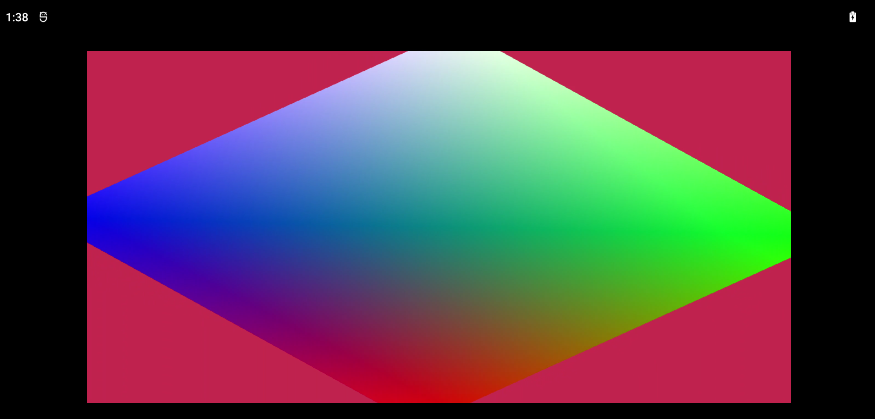

The result

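Why HardwareBuffer

HardwareBuffer is available on Android 8.0 (API level 26) and above; its underlying implementation is GraphicBuffer.

- Cross-process shared memory. Processes can share the data directly, without costly memory copies.

- Support for common formats such as RGB and YUV, so camera frames and similar data can be transferred efficiently.

- Native support in graphics APIs such as OpenGL and Vulkan. It integrates directly with these APIs, so costly calls such as glReadPixels() are not needed.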

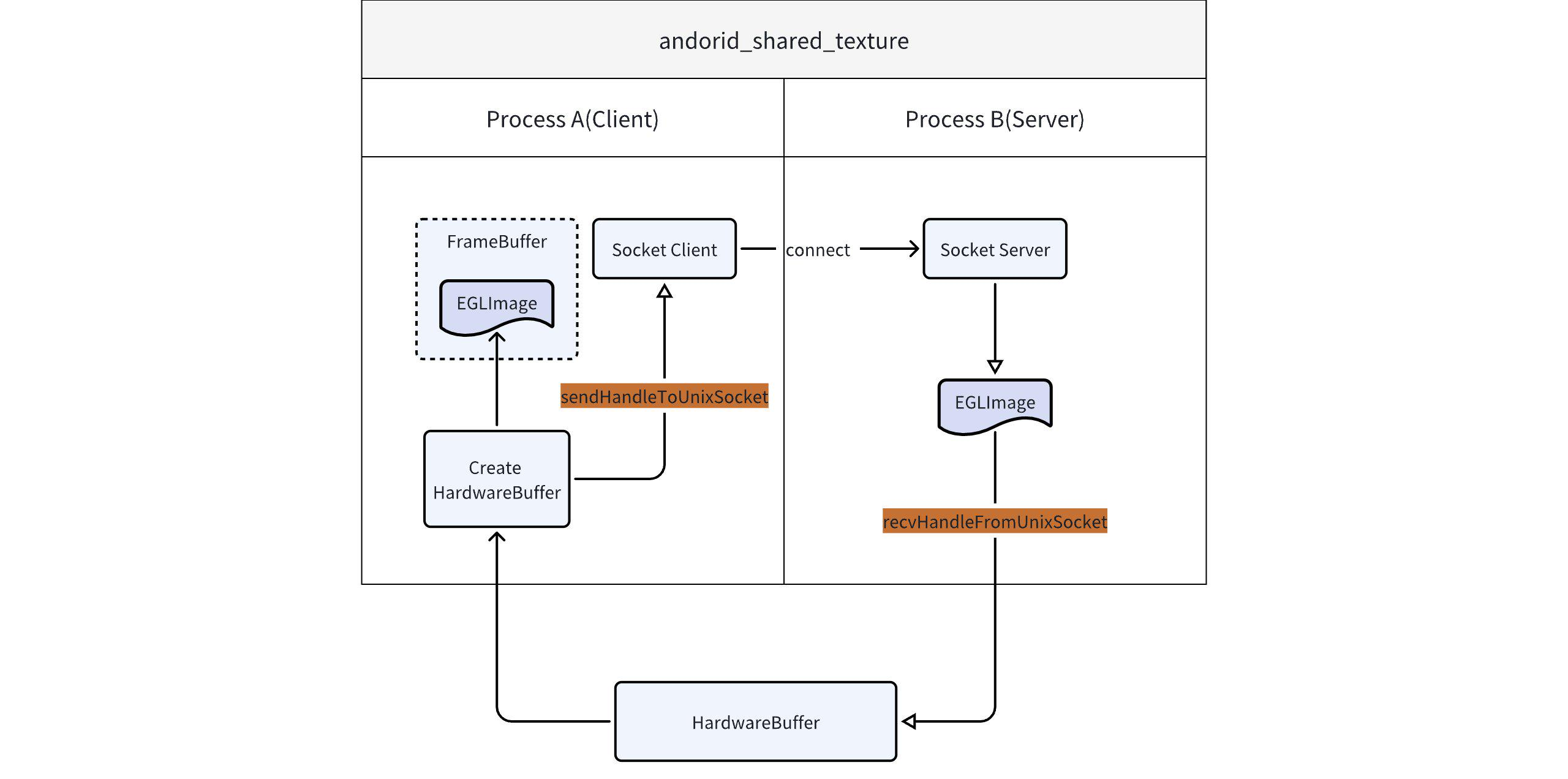

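Example overview

Brief description:

- The example consists of two processes: the client RendererClient and the server RendererServer. Both render with OpenGL ES; a Vulkan implementation of the server-side rendering will be added later.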

xxxx:/ # ps -ef | grep com.example.Renderer

u0_a83 9645 612 28 01:37:17 ? 00:00:11 com.example.RendererServer

u0_a84 9736 612 17 01:37:23 ? 00:00:06 com.example.RendererClient

root 9949 9433 13 01:37:58 pts/3 00:00:00 grep com.example.Renderer

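- The server first starts a socket and waits for a connection; the client connects to it when it starts.

- The client initializes, creates a HardwareBuffer, binds the HardwareBuffer to an EGLImage, and attaches that EGLImage to a framebuffer (FBO).

- The client sends the HardwareBuffer to the server with AHardwareBuffer_sendHandleToUnixSocket().

- When the server receives the HardwareBuffer, it stops waiting, creates its own EGLImage from that HardwareBuffer, and draws the EGLImage as a texture on a quad to display it.

- Once these steps are done, the client's and the server's EGLImages are tied together through the HardwareBuffer. From then on the client simply renders its virtual scene into the framebuffer.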
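The NDK call hides how the handle actually crosses the process boundary. As far as I understand, it relies on the standard Unix domain socket feature of passing file descriptors as SCM_RIGHTS ancillary data. The sketch below illustrates only that mechanism with plain POSIX calls; it is not the NDK's implementation, and SendFd and RecvFd are hypothetical helper names.

```cpp
#include <cassert>
#include <cstring>
#include <sys/socket.h>
#include <sys/uio.h>

// Hypothetical helper: sends one file descriptor over a connected Unix socket.
// Returns 0 on success, -1 on failure.
int SendFd(int sock, int fdToSend) {
    assert(sock >= 0 && fdToSend >= 0);
    char payload = 'F';  // one byte of ordinary data travels with the descriptor
    iovec iov{&payload, sizeof(payload)};
    alignas(cmsghdr) char control[CMSG_SPACE(sizeof(int))] = {};

    msghdr msg{};
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = control;
    msg.msg_controllen = sizeof(control);

    // The descriptor itself goes into an SCM_RIGHTS control message.
    cmsghdr* cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    std::memcpy(CMSG_DATA(cmsg), &fdToSend, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

// Hypothetical helper: receives one file descriptor sent by SendFd.
// Returns the new descriptor (valid in the receiving process) or -1.
int RecvFd(int sock) {
    assert(sock >= 0);
    char payload = 0;
    iovec iov{&payload, sizeof(payload)};
    alignas(cmsghdr) char control[CMSG_SPACE(sizeof(int))] = {};

    msghdr msg{};
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = control;
    msg.msg_controllen = sizeof(control);

    if (recvmsg(sock, &msg, 0) <= 0) {
        return -1;
    }
    cmsghdr* cmsg = CMSG_FIRSTHDR(&msg);
    if (cmsg == nullptr || cmsg->cmsg_level != SOL_SOCKET ||
        cmsg->cmsg_type != SCM_RIGHTS) {
        return -1;
    }
    int fd = -1;
    std::memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
    return fd;
}
```

The receiver gets a different descriptor number that refers to the same kernel object, which is why both processes end up looking at the same buffer rather than at a copy.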

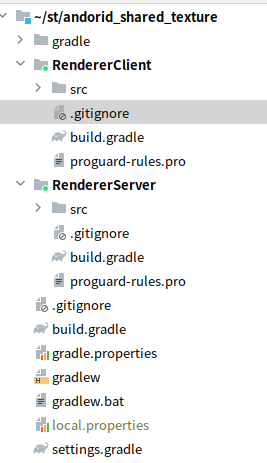

Full code: https://gitee.com/xingchen0085/andorid_shared_texture

Implementation steps

Initial project structure

Gradle manages two modules, RendererServer and RendererClient.

Server socket

In the android_app command handler, start a socket thread that sets up the socket server.

// Main.cpp

int socketFd = -1; // global variable

int dataSocket = -1; // global variable

void cmd_handler(struct android_app *app, int32_t cmd){

switch (cmd) {

case APP_CMD_INIT_WINDOW: { // ANativeWindow init

LOG_D(" APP_CMD_INIT_WINDOW");

if(socketFd < 0){

pthread_t serverThread;

pthread_create(&serverThread, nullptr, SetupServer, nullptr);

}

break;

}

...

...

}

}

The socket server implementation.

// Main.cpp

void* SetupServer(void* obj){

int ret;

struct sockaddr_un serverAddr;

char socketName[108];

LOG_I("Start server setup");

socketFd = socket(AF_UNIX, SOCK_STREAM, 0);

if(socketFd < 0){

LOG_E("socket: %s", strerror(errno));

exit(EXIT_FAILURE);

}

LOG_D("socket made.");

memcpy(&socketName[0], "\0", 1);

strcpy(&socketName[1], SOCKET_NAME);

memset(&serverAddr, 0, sizeof(struct sockaddr_un));

serverAddr.sun_family = AF_UNIX;

strncpy(serverAddr.sun_path, socketName, sizeof(serverAddr.sun_path) - 1); // max is 108

ret = bind(socketFd, reinterpret_cast<const sockaddr *>(&serverAddr), sizeof(struct sockaddr_un));

if(ret < 0){

LOG_E("bind: %s", strerror(errno));

exit(EXIT_FAILURE);

}

LOG_D("bind made");

// allow up to 8 pending connections (listen backlog) for this demo

ret = listen(socketFd, 8);

if(ret < 0){

LOG_E("listen: %s", strerror(errno));

exit(EXIT_FAILURE);

}

LOG_I("Setup Server complete.");

while (1){

// accept

dataSocket = accept(socketFd, nullptr, nullptr);

LOG_I("accept dataSocket: %d", dataSocket);

if(dataSocket < 0){

LOG_E("accept: %s", strerror(errno));

break;

}

while (1){

ret = AHardwareBuffer_recvHandleFromUnixSocket(dataSocket, &hwBuffer);

if(ret != 0){

LOG_E("recvHandleFromUnixSocket: %d", ret);

break;

}

AHardwareBuffer_Desc desc;

AHardwareBuffer_describe(hwBuffer, &desc);

LOG_D("recvHandleFromUnixSocket: %d x %d, layer: %d, format: %d", desc.width, desc.height, desc.layers, desc.format);

}

}

LOG_D("Close dataSocket");

close(dataSocket);

return nullptr;

}

Client socket

Connect to the socket server from the android_app command handler.

// Main.cpp

int dataSocket = -1; // global variable

void cmd_handler(struct android_app *app, int32_t cmd){

switch (cmd) {

case APP_CMD_INIT_WINDOW: { // ANativeWindow init

LOG_D(" APP_CMD_INIT_WINDOW");

if(dataSocket < 0){

SetupClient();

...

...

}

break;

}

...

...

}

}

The socket connection implementation.

// Main.cpp

void SetupClient(){

char socketName[108];

struct sockaddr_un serverAddr;

dataSocket = socket(AF_UNIX, SOCK_STREAM, 0);

if(dataSocket < 0){

LOG_E("socket: %s", strerror(errno));

exit(EXIT_FAILURE);

}

memcpy(&socketName[0], "\0", 1);

strcpy(&socketName[1], SOCKET_NAME);

memset(&serverAddr, 0, sizeof(struct sockaddr_un));

serverAddr.sun_family = AF_UNIX;

strncpy(serverAddr.sun_path, socketName, sizeof(serverAddr.sun_path) - 1);

// connect

int ret = connect(dataSocket, reinterpret_cast<const sockaddr *>(&serverAddr), sizeof(struct sockaddr_un));

if(ret < 0){

LOG_E("connect: %s", strerror(errno));

exit(EXIT_FAILURE);

}

LOG_I("Client Setup complete.");

}
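One detail in the two socket listings is worth spelling out. socketName begins with '\0' (the marker for the Linux abstract socket namespace), and strncpy treats a buffer that starts with '\0' as an empty string, so sun_path ends up all zeros whatever SOCKET_NAME is. The connection still works because both sides build the same address, but the name is effectively ignored. A minimal sketch of an address builder that keeps the name follows; MakeAbstractAddress is a hypothetical helper, not part of the original project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <sys/socket.h>
#include <sys/un.h>

// Hypothetical helper: fills `addr` with an abstract-namespace address
// (a leading '\0' followed by `name`) and returns the address length to
// pass to bind() or connect().
socklen_t MakeAbstractAddress(const char* name, sockaddr_un* addr) {
    const std::size_t nameLen = std::strlen(name);
    // The name must fit after the leading '\0' byte.
    assert(nameLen > 0 && nameLen < sizeof(addr->sun_path) - 1);

    std::memset(addr, 0, sizeof(*addr));
    addr->sun_family = AF_UNIX;
    // sun_path[0] stays '\0'; the name is copied right after it.
    std::memcpy(addr->sun_path + 1, name, nameLen);

    // Count only the bytes that are part of the name, so trailing zero
    // padding does not become part of the abstract address.
    return static_cast<socklen_t>(offsetof(sockaddr_un, sun_path) + 1 + nameLen);
}
```

Both processes would have to use the same helper and pass the returned length to bind() and connect(); mixing it with the sizeof(struct sockaddr_un) length used in the listings would produce two different abstract addresses.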

Client: creating the HardwareBuffer

// Main.cpp

int dataSocket = -1; // global variable

void cmd_handler(struct android_app *app, int32_t cmd){

switch (cmd) {

case APP_CMD_INIT_WINDOW: { // ANativeWindow init

LOG_D(" APP_CMD_INIT_WINDOW");

if(dataSocket < 0){

SetupClient();

AHardwareBuffer_Desc hwDesc;

hwDesc.format = AHARDWAREBUFFER_FORMAT_R8G8B8A8_UNORM;

hwDesc.width = 1024;

hwDesc.height = 1024;

hwDesc.layers = 1;

hwDesc.rfu0 = 0;

hwDesc.rfu1 = 0;

hwDesc.stride = 0;

hwDesc.usage = AHARDWAREBUFFER_USAGE_CPU_READ_NEVER | AHARDWAREBUFFER_USAGE_CPU_WRITE_NEVER

| AHARDWAREBUFFER_USAGE_GPU_COLOR_OUTPUT | AHARDWAREBUFFER_USAGE_GPU_SAMPLED_IMAGE;

int rtCode = AHardwareBuffer_allocate(&hwDesc, &hwBuffer);

if(rtCode != 0 || !hwBuffer){

LOG_E("Failed to allocate hardware buffer.");

exit(EXIT_FAILURE);

}

}

break;

}

...

...

}

}

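A few of the AHardwareBuffer_Desc fields explained:

- format: the pixel format, which can be an RGB or a YUV format. This example handles RGBA data, so it is set to AHARDWAREBUFFER_FORMAT_R8G8B8A8_UNORM.

- width: the width of the shared texture, fixed at 1024 here.

- height: the height of the shared texture, fixed at 1024 here.

- usage: the intended usage. The flags used here mean that the HardwareBuffer is never read or written by the CPU and is used by the GPU only, as a color attachment or as a sampled texture.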
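To get a feel for what these fields imply, the arithmetic below estimates the minimum memory behind the shared buffer. It is a rough sketch under the assumption of tightly packed rows: the real allocator chooses its own row stride (reported by AHardwareBuffer_describe in the stride field), so the actual allocation can be larger. MinBufferBytes is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: lower bound on the buffer size in bytes, assuming
// rows are tightly packed (no stride padding).
std::uint64_t MinBufferBytes(std::uint32_t width, std::uint32_t height,
                             std::uint32_t layers, std::uint32_t bytesPerPixel) {
    assert(width > 0 && height > 0 && layers > 0 && bytesPerPixel > 0);
    return static_cast<std::uint64_t>(width) * height * layers * bytesPerPixel;
}
```

For the 1024 x 1024, single-layer R8G8B8A8 buffer in this example (4 bytes per pixel), MinBufferBytes(1024, 1024, 1, 4) gives 4,194,304 bytes, that is 4 MiB that would otherwise have to be copied for every frame.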

Client: creating the EGLImage and framebuffer

Create a ClientRenderer.

#pragma once

#include <cstdint>

#include <string>

#include <vector>

#include <android/asset_manager.h>

#include <EGL/egl.h>

#define EGL_EGLEXT_PROTOTYPES

#define GL_GLEXT_PROTOTYPES

#include <EGL/eglext.h>

#include <GLES2/gl2.h>

#include <GLES2/gl2ext.h>

#include <GLES2/gl2platform.h>

#include <android/hardware_buffer.h>

class ClientRenderer{

public:

static ClientRenderer* GetInstance();

void Init(AHardwareBuffer *hwBuffer, int dataSocket);

void Destroy();

void Draw();

AAssetManager *m_NativeAssetManager;

struct android_app *m_GlobalApp;

private:

ClientRenderer() = default;

int InitEGLEnv();

void DestroyEGLEnv();

void CreateProgram();

int ReadShader(const char *fileName, std::vector<char> &source) const;

static ClientRenderer s_Renderer;

EGLDisplay m_EglDisplay = EGL_NO_DISPLAY;

EGLSurface m_EglSurface = EGL_NO_SURFACE;

EGLContext m_EglContext = EGL_NO_CONTEXT;

EGLConfig m_EglConfig;

GLint m_VertexShader;

GLint m_FragShader;

GLuint m_Program;

uint32_t m_ImgWidth = 1024;

uint32_t m_ImgHeight = 1024;

GLuint m_OutputFBO;

GLuint m_OutputTexture;

EGLImageKHR m_NativeBufferImage;

};

Set up the OpenGL ES and EGL environment.

//ClientRenderer.cpp

int ClientRenderer::InitEGLEnv() {

const EGLint confAttr[] = {

EGL_RENDERABLE_TYPE, EGL_OPENGL_ES3_BIT_KHR,

EGL_SURFACE_TYPE, EGL_WINDOW_BIT,

EGL_RED_SIZE, 8,

EGL_GREEN_SIZE, 8,

EGL_BLUE_SIZE, 8,

EGL_ALPHA_SIZE, EGL_DONT_CARE,

EGL_DEPTH_SIZE, EGL_DONT_CARE,

EGL_STENCIL_SIZE, EGL_DONT_CARE,

EGL_NONE

};

// EGL context attributes

const EGLint ctxAttr[] = {

EGL_CONTEXT_CLIENT_VERSION, 3, // the shaders use "#version 300 es", so request an ES 3 context

EGL_NONE

};

EGLint eglMajVers, eglMinVers;

EGLint numConfigs;

int resultCode = 0;

do {

m_EglDisplay = eglGetDisplay(EGL_DEFAULT_DISPLAY);

if(m_EglDisplay == EGL_NO_DISPLAY) {

//Unable to open connection to local windowing system

LOG_E("BgRender::CreateGlesEnv Unable to open connection to local windowing system");

resultCode = -1;

break;

}

if(!eglInitialize(m_EglDisplay, &eglMajVers, &eglMinVers)) {

// Unable to initialize EGL. Handle and recover

LOG_E("BgRender::CreateGlesEnv Unable to initialize EGL");

resultCode = -1;

break;

}

LOG_I("BgRender::CreateGlesEnv EGL init with version %d.%d", eglMajVers, eglMinVers);

if(!eglChooseConfig(m_EglDisplay, confAttr, &m_EglConfig, 1, &numConfigs)) {

LOG_E("BgRender::CreateGlesEnv some config is wrong");

resultCode = -1;

break;

}

m_EglSurface = eglCreateWindowSurface(m_EglDisplay, m_EglConfig, m_GlobalApp->window, nullptr);

if(m_EglSurface == EGL_NO_SURFACE) {

switch(eglGetError()) {

case EGL_BAD_ALLOC:

// Not enough resources available. Handle and recover

LOG_E("BgRender::CreateGlesEnv Not enough resources available");

break;

case EGL_BAD_CONFIG:

// Verify that provided EGLConfig is valid

LOG_E("BgRender::CreateGlesEnv provided EGLConfig is invalid");

break;

case EGL_BAD_PARAMETER:

// Verify that the EGL_WIDTH and EGL_HEIGHT are

// non-negative values

LOG_E("BgRender::CreateGlesEnv provided EGL_WIDTH and EGL_HEIGHT is invalid");

break;

case EGL_BAD_MATCH:

// Check window and EGLConfig attributes to determine

// compatibility and pbuffer-texture parameters

LOG_E("BgRender::CreateGlesEnv Check window and EGLConfig attributes");

break;

}

}

m_EglContext = eglCreateContext(m_EglDisplay, m_EglConfig, EGL_NO_CONTEXT, ctxAttr);

if(m_EglContext == EGL_NO_CONTEXT) {

EGLint error = eglGetError();

if(error == EGL_BAD_CONFIG) {

// Handle error and recover

LOG_E("BgRender::CreateGlesEnv EGL_BAD_CONFIG");

resultCode = -1;

break;

}

}

if(!eglMakeCurrent(m_EglDisplay, m_EglSurface, m_EglSurface, m_EglContext)) {

LOG_E("BgRender::CreateGlesEnv MakeCurrent failed");

resultCode = -1;

break;

}

LOG_I("BgRender::CreateGlesEnv initialize success!");

} while (false);

if (resultCode != 0){

LOG_E("BgRender::CreateGlesEnv fail");

}

return resultCode;

}

Initialize the shaders.

// client.vert

#version 300 es

layout(location=0) in vec2 a_position;

layout(location=1) in vec4 a_color;

layout(location=2) in vec2 a_texcoord;

uniform mat4 modelMat;

out vec4 v_color;

out vec2 v_texcoord;

void main(){

gl_Position = modelMat * vec4(a_position, 0.0, 1.0);

v_color = a_color;

v_texcoord = a_texcoord;

}

//client.frag

#version 300 es

precision mediump float;

in vec4 v_color;

in vec2 v_texcoord;

out vec4 FragColor;

void main() {

FragColor = v_color;

}

Create the program. The helper implementations (CreateGLShader, CreateGLProgram) are omitted here.

void ClientRenderer::CreateProgram() {

std::vector<char> vsSource, fsSource;

if(ReadShader("shaders/client.vert", vsSource) < 0){

LOG_E("read shader error.");

return;

}

if(ReadShader("shaders/client.frag", fsSource) < 0){

LOG_E("read shader error.");

return;

}

m_VertexShader = CreateGLShader(std::string(vsSource.data(), vsSource.size()).data(), GL_VERTEX_SHADER);

m_FragShader = CreateGLShader(std::string(fsSource.data(), fsSource.size()).data(), GL_FRAGMENT_SHADER);

m_Program = CreateGLProgram(m_VertexShader, m_FragShader);

}

Initialize the framebuffer and the EGLImage.

//ClientRenderer.cpp

void ClientRenderer::Init(AHardwareBuffer *hwBuffer, int dataSocket) {

DEBUG_LOG();

if(InitEGLEnv() != 0) return;

CreateProgram();

/////////////////////////////////////////////////////////////////////////////////////////////

// Main point: FrameBuffer & EGLImage //

/////////////////////////////////////////////////////////////////////////////////////////////

glGenFramebuffers(1, &m_OutputFBO);

glBindFramebuffer(GL_FRAMEBUFFER, m_OutputFBO);

glGenTextures(1, &m_OutputTexture);

glBindTexture(GL_TEXTURE_2D, m_OutputTexture);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, m_ImgWidth, m_ImgHeight, 0, GL_RGBA, GL_UNSIGNED_BYTE, 0);

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, m_OutputTexture, 0);

uint32_t fboStatus = glCheckFramebufferStatus(GL_FRAMEBUFFER);

if(fboStatus != GL_FRAMEBUFFER_COMPLETE){

LOG_E("func %s, line %d, OpenGL renderFramebuffer NOT COMPLETE: %d", __func__, __LINE__, fboStatus);

}

// 1. HardwareBuffer ---> EGLClientBuffer

EGLClientBuffer clientBuffer = eglGetNativeClientBufferANDROID(hwBuffer);

EGLint eglImageAttributes[] = { EGL_IMAGE_PRESERVED_KHR, EGL_TRUE, EGL_NONE };

m_NativeBufferImage = eglCreateImageKHR(m_EglDisplay, EGL_NO_CONTEXT, EGL_NATIVE_BUFFER_ANDROID, clientBuffer, eglImageAttributes);

glBindTexture(GL_TEXTURE_2D, m_OutputTexture);

glEGLImageTargetTexture2DOES(GL_TEXTURE_2D, m_NativeBufferImage);

glBindTexture(GL_TEXTURE_2D, 0);

glBindFramebuffer(GL_FRAMEBUFFER, 0);

// 2. sendHandleToUnixSocket

AHardwareBuffer_sendHandleToUnixSocket(hwBuffer, dataSocket);

}

Besides the usual OpenGL ES framebuffer setup, there are two key points here:

- Bind the HardwareBuffer to an EGLImage, so that the GPU works on the buffer directly and no memory copy is needed.

EGLClientBuffer clientBuffer = eglGetNativeClientBufferANDROID(hwBuffer);

EGLint eglImageAttributes[] = { EGL_IMAGE_PRESERVED_KHR, EGL_TRUE, EGL_NONE };

m_NativeBufferImage = eglCreateImageKHR(m_EglDisplay, EGL_NO_CONTEXT, EGL_NATIVE_BUFFER_ANDROID, clientBuffer, eglImageAttributes);

- Send the HardwareBuffer to the socket server with AHardwareBuffer_sendHandleToUnixSocket. The server obtains the buffer in the server-side receiving step described later in this article.

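Client rendering

Client rendering is simple: it draws a colored quad that rotates about the Z axis over time. Because the angle is sinf(timeSec), the quad swings back and forth rather than spinning continuously.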

//ClientRenderer.cpp

//#define RENDER_TO_SCREEN

void ClientRenderer::Draw() {

glUseProgram(m_Program);

{

float x_scale = 0.8f;

float y_scale = 0.8f;

GLfloat vertices[] = {

-1.f * x_scale, -1.f * y_scale,

1.f * x_scale, -1.f * y_scale,

-1.f * x_scale, 1.f * y_scale,

1.f * x_scale, 1.f * y_scale,

};

GLfloat colors[] = {

1.f, 0.f, 0.f, 1.f,

0.f, 1.f, 0.f, 1.f,

0.f, 0.f, 1.f, 1.f,

1.f, 1.f, 1.f, 1.f

};

GLfloat texCoords[] = {

0.0f, 0.0f,

1.0f, 0.0f,

0.0f, 1.0f,

1.0f, 1.0f

};

GLuint posLoc = glGetAttribLocation(m_Program, "a_position");

glEnableVertexAttribArray(posLoc);

glVertexAttribPointer(posLoc, 2, GL_FLOAT, GL_FALSE, 0, vertices);

GLuint colorLoc = glGetAttribLocation(m_Program, "a_color");

glEnableVertexAttribArray(colorLoc);

glVertexAttribPointer(colorLoc, 4, GL_FLOAT, GL_FALSE, 0, colors);

GLuint texcoordLoc = glGetAttribLocation(m_Program, "a_texcoord");

glEnableVertexAttribArray(texcoordLoc);

glVertexAttribPointer(texcoordLoc, 2, GL_FLOAT, GL_FALSE, 0, texCoords);

}

glClearColor(0.1f, 0.2f, 0.3f, 1.f);

glClear(GL_COLOR_BUFFER_BIT);

glViewport(0, 0, m_ImgWidth, m_ImgHeight);

glScissor(0, 0, m_ImgWidth, m_ImgHeight);

#ifndef RENDER_TO_SCREEN

glBindFramebuffer(GL_FRAMEBUFFER, m_OutputFBO);

glViewport(0, 0, m_ImgWidth, m_ImgHeight);

glScissor(0, 0, m_ImgWidth, m_ImgHeight);

#endif

glClearColor(0.8f, 0.2f, 0.3f, 1.f);

glClear(GL_COLOR_BUFFER_BIT);

float timeSec = getTimeSec();

glm::mat4 modelMat = glm::rotate(glm::mat4(1.f), sinf(timeSec), glm::vec3(0, 0, 1));

glUniformMatrix4fv(glGetUniformLocation(m_Program, "modelMat"), 1, GL_FALSE, glm::value_ptr(modelMat));

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

#ifndef RENDER_TO_SCREEN

glBindFramebuffer(GL_FRAMEBUFFER, 0);

#endif

glUseProgram(0);

eglSwapBuffers(m_EglDisplay, m_EglSurface);

}
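The model matrix in Draw() is a plain rotation about the Z axis with sinf(timeSec) as the angle. The sketch below reproduces that math without glm to show what it does to the quad's corners. Vec2, RotateZ, SwingAngle, and SpinAngle are hypothetical names used only for illustration; SpinAngle is an alternative that is not in the original code.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 {
    float x;
    float y;
};

// Rotates p counter-clockwise about the Z axis by angleRad (radians),
// which is what the Z-axis rotation matrix does to a 2D position.
Vec2 RotateZ(Vec2 p, float angleRad) {
    assert(std::isfinite(angleRad));
    const float c = std::cos(angleRad);
    const float s = std::sin(angleRad);
    return {c * p.x - s * p.y, s * p.x + c * p.y};
}

// Angle as used in Draw(): sin(t) stays within [-1, 1] radian
// (about +/- 57 degrees), so the quad swings back and forth.
float SwingAngle(float timeSec) {
    return std::sin(timeSec);
}

// Hypothetical alternative: an angle that keeps growing with time,
// which would make the quad spin continuously.
float SpinAngle(float timeSec, float radiansPerSecond) {
    return timeSec * radiansPerSecond;
}
```

For example, RotateZ({1.f, 0.f}, SwingAngle(timeSec)) never leaves the arc between roughly -57 and +57 degrees, whereas feeding SpinAngle into the same function sweeps the full circle.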

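Server: receiving the HardwareBuffer

In the server's accept loop, read from the dataSocket connected to the client and reconstruct the HardwareBuffer from the received handle.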

//Main.cpp

void* SetupServer(void* obj){

...

...

LOG_I("Setup Server complete.");

while (1){

...

...

while (1){

ret = AHardwareBuffer_recvHandleFromUnixSocket(dataSocket, &hwBuffer);

if(ret != 0){

LOG_E("recvHandleFromUnixSocket: %d", ret);

break;

}

AHardwareBuffer_Desc desc;

AHardwareBuffer_describe(hwBuffer, &desc);

LOG_D("recvHandleFromUnixSocket: %d x %d, layer: %d, format: %d", desc.width, desc.height, desc.layers, desc.format);

}

}

...

...

}
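The listing stores the received buffer in a global that is written on the socket thread and later read on the render thread. How the project hands it over is not shown here, so the following is only one possible approach, sketched under that assumption: a mutex-protected single-slot mailbox that the render loop polls once per frame. HandoffSlot is a hypothetical class, not part of the original code.

```cpp
#include <cassert>
#include <mutex>

// Hypothetical single-slot mailbox: the socket thread publishes a pointer,
// the render thread takes it the next time it polls.
template <typename T>
class HandoffSlot {
public:
    // Called by the producer (for example the socket thread).
    void Publish(T* value) {
        assert(value != nullptr);
        std::lock_guard<std::mutex> lock(m_Mutex);
        m_Value = value;
    }

    // Called by the consumer (for example once per frame on the render
    // thread). Returns the pending pointer and empties the slot, or
    // nullptr when nothing new has arrived.
    T* TryTake() {
        std::lock_guard<std::mutex> lock(m_Mutex);
        T* value = m_Value;
        m_Value = nullptr;
        return value;
    }

private:
    std::mutex m_Mutex;
    T* m_Value = nullptr;
};
```

With something like HandoffSlot<AHardwareBuffer>, the receive loop would call Publish(hwBuffer) after a successful AHardwareBuffer_recvHandleFromUnixSocket, and the render loop would initialize the renderer the first time TryTake() returns a non-null pointer.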

Server rendering

Create a ServerRenderer.

#pragma once

#include <cstdint>

#include <string>

#include <vector>

#include <android/asset_manager.h>

#include <EGL/egl.h>

#define EGL_EGLEXT_PROTOTYPES

#define GL_GLEXT_PROTOTYPES

#include <EGL/eglext.h>

#include <GLES2/gl2.h>

#include <GLES2/gl2ext.h>

#include <GLES2/gl2platform.h>

#include <android/hardware_buffer.h>

class ServerRenderer{

public:

static ServerRenderer* GetInstance();

void Init(AHardwareBuffer *hwBuffer);

void Destroy();

void Draw();

AAssetManager *m_NativeAssetManager;

struct android_app *m_GlobalApp;

private:

ServerRenderer() = default;

uint8_t* ReaderImage(const char *fileName, size_t *outFileLength);

int InitEGLEnv();

void DestroyEGLEnv();

void CreateProgram();

int ReadShader(const char *fileName, std::vector<char> &source) const;

static ServerRenderer s_Renderer;

EGLDisplay m_EglDisplay = EGL_NO_DISPLAY;

EGLSurface m_EglSurface = EGL_NO_SURFACE;

EGLContext m_EglContext = EGL_NO_CONTEXT;

EGLConfig m_EglConfig;

GLint m_VertexShader;

GLint m_FragShader;

GLuint m_Program;

uint32_t m_ImgWidth = 1024;

uint32_t m_ImgHeight = 1024;

GLuint m_InputTexture;

EGLImageKHR m_NativeBufferImage;

};

Initialize the shaders.

//server.vert

#version 300 es

layout(location=0) in vec2 a_position;

layout(location=1) in vec4 a_color;

layout(location=2) in vec2 a_texcoord;

out vec4 v_color;

out vec2 v_texcoord;

void main(){

gl_Position = vec4(a_position, 0.0, 1.0);

v_color = a_color;

v_texcoord = a_texcoord;

}

//server.frag

#version 300 es

precision mediump float;

precision mediump sampler2D;

in vec4 v_color;

in vec2 v_texcoord;

uniform sampler2D tex;

out vec4 FragColor;

void main() {

FragColor = vec4(texture(tex, v_texcoord).rgb, 1.f);

}

Initializing OpenGL ES/EGL and creating the program are the same as on the client, so they are omitted here.

Initialize the EGLImage.

//ServerRenderer.cpp

void ServerRenderer::Init(AHardwareBuffer *hwBuffer) {

DEBUG_LOG();

if(InitEGLEnv() != 0) return;

CreateProgram();

glGenTextures(1, &m_InputTexture);

if(hwBuffer && m_NativeBufferImage == nullptr){

EGLClientBuffer clientBuffer = eglGetNativeClientBufferANDROID(hwBuffer);

EGLint eglImageAttributes[] = { EGL_IMAGE_PRESERVED_KHR, EGL_TRUE, EGL_NONE };

m_NativeBufferImage = eglCreateImageKHR(m_EglDisplay, EGL_NO_CONTEXT, EGL_NATIVE_BUFFER_ANDROID, clientBuffer, eglImageAttributes);

glBindTexture(GL_TEXTURE_2D, m_InputTexture);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glEGLImageTargetTexture2DOES(GL_TEXTURE_2D, m_NativeBufferImage);

glBindTexture(GL_TEXTURE_2D, 0);

}

}

Render the texture.

//ServerRenderer.cpp

void ServerRenderer::Draw() {

glUseProgram(m_Program);

{

float x_scale = 0.8f;

float y_scale = 0.8f;

GLfloat vertices[] = {

-1.f * x_scale, -1.f * y_scale,

1.f * x_scale, -1.f * y_scale,

-1.f * x_scale, 1.f * y_scale,

1.f * x_scale, 1.f * y_scale,

};

GLfloat colors[] = {

1.f, 0.f, 0.f, 1.f,

0.f, 1.f, 0.f, 1.f,

0.f, 0.f, 1.f, 1.f,

1.f, 1.f, 1.f, 1.f

};

GLfloat texCoords[] = {

0.0f, 0.0f,

1.0f, 0.0f,

0.0f, 1.0f,

1.0f, 1.0f

};

GLuint posLoc = glGetAttribLocation(m_Program, "a_position");

glEnableVertexAttribArray(posLoc);

glVertexAttribPointer(posLoc, 2, GL_FLOAT, GL_FALSE, 0, vertices);

GLuint colorLoc = glGetAttribLocation(m_Program, "a_color");

glEnableVertexAttribArray(colorLoc);

glVertexAttribPointer(colorLoc, 4, GL_FLOAT, GL_FALSE, 0, colors);

GLuint texcoordLoc = glGetAttribLocation(m_Program, "a_texcoord");

glEnableVertexAttribArray(texcoordLoc);

glVertexAttribPointer(texcoordLoc, 2, GL_FLOAT, GL_FALSE, 0, texCoords);

}

glClearColor(0.f, 0.f, 0.f, 1.f);

glClear(GL_COLOR_BUFFER_BIT);

glUniform1i(glGetUniformLocation(m_Program, "tex"), 0);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, m_InputTexture);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

eglSwapBuffers(m_EglDisplay, m_EglSurface);

glUseProgram(0);

}

The core idea is to sample the EGLImage as a texture on a quad, which "pastes" the client's rendered content onto the server's screen.

Running

- Install the two APKs.

adb install -t -f RendererServer-debug.apk

adb install -t -f RendererClient-debug.apk

- Run RendererServer first.

adb shell am start -n com.example.RendererServer/android.app.NativeActivity

At this point the server has not started rendering, so the screen should be black. The log output looks like this:

01:37:31.581 14336-14358 RendererServer D ----------------------------------------------------------------

01:37:31.581 14336-14358 RendererServer D android_main()

01:37:31.582 14336-14336 threaded_app V Start: 0xb40000746cec61f0

01:37:31.582 14336-14358 threaded_app V activityState=10

01:37:31.583 14336-14336 threaded_app V Resume: 0xb40000746cec61f0

01:37:31.583 14336-14358 threaded_app V activityState=11

01:37:31.590 14336-14336 threaded_app V InputQueueCreated: 0xb40000746cec61f0 -- 0xb40000748ce9b560

01:37:31.590 14336-14358 threaded_app V APP_CMD_INPUT_CHANGED

01:37:31.590 14336-14358 threaded_app V Attaching input queue to looper

01:37:31.601 14336-14336 threaded_app V NativeWindowCreated: 0xb40000746cec61f0 -- 0xb40000752cea0a70

01:37:31.601 14336-14358 threaded_app V APP_CMD_INIT_WINDOW

01:37:31.601 14336-14358 RendererServer D APP_CMD_INIT_WINDOW

01:37:31.601 14336-14361 RendererServer I Start server setup

01:37:31.601 14336-14361 RendererServer D socket made.

01:37:31.601 14336-14361 RendererServer D bind made

01:37:31.601 14336-14361 RendererServer I Setup Server complete.

- Then run RendererClient.

adb shell am start -n com.example.RendererClient/android.app.NativeActivity

The log output looks like this:

01:40:35.691 14853-14877 RendererClient D ----------------------------------------------------------------

01:40:35.691 14853-14877 RendererClient D android_main()

01:40:35.692 14853-14853 threaded_app V Start: 0xb40000746cec61f0

01:40:35.692 14853-14877 threaded_app V activityState=10

01:40:35.693 14853-14853 threaded_app V Resume: 0xb40000746cec61f0

01:40:35.693 14853-14877 threaded_app V activityState=11

01:40:35.700 14853-14853 threaded_app V InputQueueCreated: 0xb40000746cec61f0 -- 0xb40000748ce9b560

01:40:35.700 14853-14877 threaded_app V APP_CMD_INPUT_CHANGED

01:40:35.700 14853-14877 threaded_app V Attaching input queue to looper

01:40:35.714 14853-14853 threaded_app V NativeWindowCreated: 0xb40000746cec61f0 -- 0xb40000752cea0a70

01:40:35.714 14853-14877 threaded_app V APP_CMD_INIT_WINDOW

01:40:35.714 14853-14877 RendererClient D APP_CMD_INIT_WINDOW

01:40:35.715 14853-14877 RendererClient I Client Setup complete.

01:40:35.715 14811-14835 RendererServer I accept dataSocket: 76

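The line "Client Setup complete." shows that the socket connection succeeded. The server also logs "accept dataSocket: 76", which shows that it has accepted the client's connection.

- Switch to the RendererServer screen to see the frames rendered by the client.

The log also prints the information of the HardwareBuffer received by the server (format 1 is AHARDWAREBUFFER_FORMAT_R8G8B8A8_UNORM):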

RendererServer D recvHandleFromUnixSocket: 1024 x 1024, layer: 1, format: 1

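To be improved

The following points are not finished yet. If there is interest, I will add them later.

- The timing of opening and closing the socket needs work; at the moment, entering the Client repeatedly results in a black screen.

- After the Client starts there should be no need to switch to the Server screen; the Server should be a background process that simply provides rendering.

- The HardwareBuffer should be created on the Server side and then passed to the Client.

- A Vulkan implementation.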

Reference

- Author: xingchen

- Link: http://www.adiosy.com/posts/android%E8%B7%A8%E8%BF%9B%E7%A8%8B%E6%B8%B2%E6%9F%93-hardwarebuffer.html

- License: This work is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND 4.0). Kindly fulfill the requirements of the aforementioned License when adapting or creating a derivative of this work.